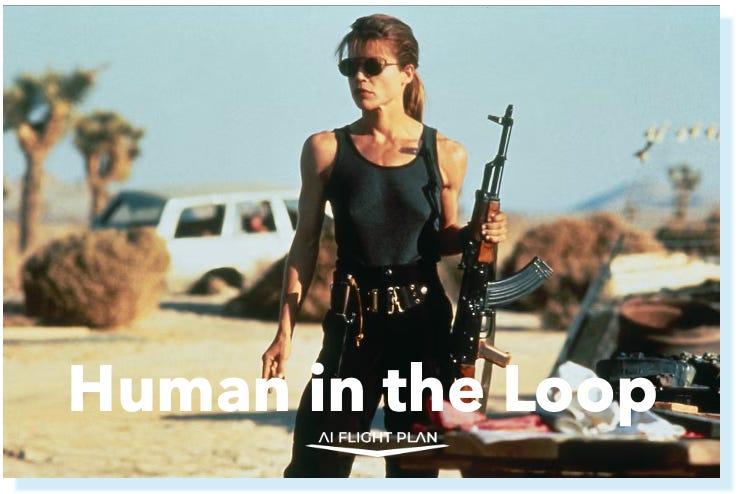

Pre-Flight Checklist: Human-in-the-Loop IS the Moat (Post #16 of 20)

Are you in command of what you're creating?

There is a deleted scene from Terminator 2 that almost no one knows or talks about. And it might be the most important one for understanding AI governance today.

Sarah and John Connor are in a dimly lit garage with the reprogrammed Terminator. Its CPU has a “read-only” switch: a safety lock designed by its creators at Skynet to stop it from learning or evolving on its own.

Can we reset the switch? ~John Connor, Terminator 2

John insists they remove the chip and flip the switch so the Terminator can learn and become more human.

Sarah hesitates, fearing the obvious risk. After a tense pause, she makes the decision to trust her son’s judgement. They reprogram the chip and reboot the Terminator.

That single act, the human decision to reprogram under supervision and only after acknowledging the risks, is the perfect metaphor for how AI should evolve.

Not autonomously. Not recklessly. But deliberately, with a human in the loop.

The future isn’t about whether AI can outthink us. It’s about whether we remain in control of how it learns.

Automation can move faster than any team, but without human oversight it can quickly go from accurate to biased, and from helpful to harmful, before anyone even notices.

In Gallagher’s 2025 “Attitudes to AI Adoption and Risk” survey, optimism around AI dropped from 82% to 68% in just one year, while the number of leaders calling AI a potential risk more than doubled.1

That’s not fear. It’s awareness. It signals a market waking up to the reality that unchecked automation introduces new kinds of risk and exposure.

At the same time, 85% of companies have already built job-protection and reskilling strategies into their AI roadmaps.

The human-in-the-loop isn’t a bottleneck. It’s the foundation for trust.

If you aren’t familiar with “human-in-the-loop” and the term “moat” as used in AI tech circles, here is a brief explanation.

Human-in-the-Loop (HITL) means people stay part of the feedback loop. Humans still review, correct or override AI decisions where it matters most.

The Moat is your defensible advantage. In AI, it’s not data or computing power. The Moat represents trust. And trust comes from human oversight (judgement, empathy, accountability) that no algorithm can replicate.

3-Step Human-in-the-Loop Framework

A structure to make oversight part of every system design. Not an afterthought.

Define Human Intervention Points.

Map where human judgement must apply, and require it only where outcomes affect real people, money or safety. (Examples: employment or credit decisions, medical or legal recommendations, customer-impacting automation.)

If you can’t identify or name the checkpoint, then you’ve already lost control.
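One way to make a checkpoint nameable is to write it down as a routing rule. The sketch below is only an illustration: the `Impact` categories mirror the people, money and safety examples above, while the checkpoint names and the `route` function are hypothetical and not part of any particular product.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    """Hypothetical impact areas, based on the examples in the text."""
    PEOPLE = "people"
    MONEY = "money"
    SAFETY = "safety"
    NONE = "none"


@dataclass(frozen=True)
class Decision:
    system: str     # name of the AI system that produced the decision
    summary: str    # short description of the proposed action
    impact: Impact  # the area the outcome affects


# Assumption: every high-impact area maps to exactly one named checkpoint.
# The names themselves are made up for this example.
CHECKPOINTS = {
    Impact.PEOPLE: "hiring-review",
    Impact.MONEY: "credit-review",
    Impact.SAFETY: "safety-review",
}


def route(decision: Decision) -> str:
    """Return the named human checkpoint for a decision, or 'auto' if none applies."""
    return CHECKPOINTS.get(decision.impact, "auto")
```

If a decision type has no entry in a table like this, that is the gap to close first.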

Build Accountability Loops.

Oversight without ownership is just noise.

Assign a Human Reviewer of Record for each critical AI process. Governance isn’t just bureaucracy. It’s traceability. Every automated action should lead back to a human name.

When systems fail, accountability must lead to a person, not a process.
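A minimal sketch of that traceability, assuming a simple in-memory log: every automated action is recorded against a named reviewer, and a process with no reviewer on file is refused outright. The `AuditLog` class and its field names are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditLog:
    # Assumption: maps each critical process to its Human Reviewer of Record.
    reviewers: dict[str, str]
    entries: list[dict] = field(default_factory=list)

    def record(self, process: str, action: str) -> dict:
        """Log an automated action; refuse any process without a named reviewer."""
        reviewer = self.reviewers.get(process)
        if not reviewer:
            raise LookupError(f"No Human Reviewer of Record for process: {process}")
        entry = {
            "process": process,
            "action": action,
            "reviewer": reviewer,
            "at": datetime.now(timezone.utc).isoformat(),  # UTC timestamp
        }
        self.entries.append(entry)
        return entry
```

The design choice worth noting is the refusal: an action that cannot be tied to a person raises an error instead of being logged anonymously.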

Train the Humans, Too.

Oversight only works when reviewers understand what they’re doing.

Teach them to:

Recognize bias or hallucinations

Spot data-integrity issues

Know when to escalate or halt automation

Test Flight: The Learning Switch Audit

Objective: Identify where your organization has locked learning behind “read-only” logic.

Locate the Switches. List every AI system and mark which ones include human override or review.

Assess the Risk. Rank each by potential financial, ethical or reputational impact.

Flip One Switch. Add a human checkpoint or rollback option, then measure how confidence and outcomes change.
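The three audit steps can be sketched in a few lines. This is an illustration under stated assumptions: the 1-to-5 scores are ones your own team would assign, the unweighted sum is just one possible way to rank, and `AISystem` and `switch_candidates` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    name: str
    has_human_override: bool  # step 1: does a human override or review exist?
    financial: int            # step 2: impact scores, 1 (low) to 5 (high)
    ethical: int
    reputational: int

    @property
    def risk(self) -> int:
        # Assumption: a plain unweighted sum; weight the terms to fit your context.
        return self.financial + self.ethical + self.reputational


def switch_candidates(systems: list[AISystem]) -> list[AISystem]:
    """Step 3: systems with no human override, highest risk first."""
    unprotected = [s for s in systems if not s.has_human_override]
    return sorted(unprotected, key=lambda s: s.risk, reverse=True)
```

The first item returned is a reasonable place to add the first checkpoint or rollback option.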

Mission Debrief

How did it go?

Did you find systems running unprotected by a Human-in-the-Loop? Were you able to identify human names for accountability?

The moment before Sarah Connor flips the CPU switch is the essence of AI governance. She didn’t hand control over to the machine. She decided when it was ready to learn.

AI governance isn’t about slowing innovation. It’s about steering it.

The moat isn’t built from firewalls or patents. The moat is built from judgement, empathy and deliberate human control.

Because the future won’t belong to the systems that learn the fastest. It will belong to the humans wise enough to decide when to flip the switch.

Editorial Note: The deleted “CPU switch” scene from Terminator 2 (1991) captures one of the most profound metaphors for AI governance ever filmed. It’s a reminder that progress without oversight is just acceleration without direction.
